Stemmatology (a.k.a. stemmatics) studies relations among different variants of a document that have been gradually built from an original by copying and modifying earlier versions. The aim of such study is to reconstruct the family tree of the variants.

We invite applications of established and, in particular, novel approaches, including, but not restricted to, hierarchical clustering, graphical modeling, link analysis, phylogenetics, and string matching.

The objective of the challenge is to evaluate the performance of various approaches. Several sets of variants of different texts are provided, and the participants should attempt to reconstruct the relationships among the variants in each data-set. Because the correct solution is known for the artificial data-sets, this enables a comparison of methods that are usually applied in unsupervised scenarios, where no ground truth is available.

Data

The data is available in plain text format. The organizers are considering whether to also provide some pre-processed formats in order to reduce the participants' effort. In any case, the plain text versions will remain available and can be used in the challenge: since pre-processing is likely to lose some information, some methods may benefit from using the original data. There is one primary data-set, which is used to determine the primary ranking of the participants. The total number of points earned on all of the other data-sets (excluding the primary one) will be used as a secondary ranking scheme. For more information on the ranking schemes, see the Rules.

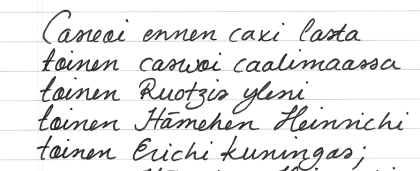

About the Nexus format: The conversion from plain (aligned) text to the Nexus format was performed by sorting, at each ‘locus’ (a place in the text), the words that appear at that locus into alphabetical order and assigning a symbol (A, B, C, …) to each distinct word. Thus, if three different variants of a given word appear in the texts, the symbols A, B, and C appear in the Nexus file at that locus. Missing words are replaced by a question mark (?). The Nexus files are directly compatible with, for instance, the PAUP* software (see the Results).

Primary Data-Set: Heinrichi

The primary data-set is the result of an experiment performed by the organizers and 17 volunteers (see Credits). The original text is in old Finnish and is known as Piispa Henrikin surmavirsi (‘The Death-Psalm of Bishop Henry’).
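The Nexus conversion described in the paragraph on the Nexus format (alphabetical ordering at each locus, one letter per distinct word, ? for a missing word) can be sketched as follows. This is a minimal illustration, not the organizers' conversion script: the function names `encode_loci` and `to_nexus` are hypothetical, "alphabetical" is assumed to mean a plain Python string sort, an empty string is assumed to mark a missing word, and the Nexus header keywords are a minimal assumption that has not been checked against the distributed files or PAUP*.

```python
from string import ascii_uppercase


def encode_loci(variants):
    """Encode aligned word variants as Nexus-style character states.

    variants: dict mapping a variant name to a list of words, one per locus;
    an empty string marks a missing word (assumption).
    Returns a dict mapping each variant name to a string of state symbols.
    """
    names = list(variants)
    n_loci = len(variants[names[0]])
    if any(len(words) != n_loci for words in variants.values()):
        raise ValueError("all variants must be aligned to the same length")
    encoded = {name: [] for name in names}
    for i in range(n_loci):
        # Distinct words at this locus, in (plain string) alphabetical order.
        words = sorted({variants[n][i] for n in names if variants[n][i]})
        if len(words) > len(ascii_uppercase):
            raise ValueError(f"locus {i} has more than 26 distinct words")
        symbol = {w: ascii_uppercase[k] for k, w in enumerate(words)}
        for name in names:
            word = variants[name][i]
            encoded[name].append(symbol[word] if word else "?")
    return {name: "".join(states) for name, states in encoded.items()}


def to_nexus(encoded):
    """Render the encoded matrix as a minimal Nexus DATA block (assumed header)."""
    n_chars = len(next(iter(encoded.values())))
    width = max(len(name) for name in encoded)
    lines = [
        "#NEXUS",
        "BEGIN DATA;",
        f"  DIMENSIONS NTAX={len(encoded)} NCHAR={n_chars};",
        '  FORMAT DATATYPE=STANDARD MISSING=? SYMBOLS="ABCDEFGHIJKLMNOPQRSTUVWXYZ";',
        "  MATRIX",
    ]
    for name, states in encoded.items():
        lines.append(f"    {name.ljust(width)}  {states}")
    lines += ["  ;", "END;"]
    return "\n".join(lines)


if __name__ == "__main__":
    toy = {"a": ["the", "cat", ""], "b": ["a", "cat", "sat"], "c": ["the", "dog", "sat"]}
    print(to_nexus(encode_loci(toy)))
```

In the toy example, locus 0 holds the words "a" and "the", so they become A and B; the missing third word of variant "a" becomes ?.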

New: The correct stemma and an evaluation script are available at the Causality workbench.

Data-Set #2: Parzival (Validation Data)

This is also an artificial data-set made by copying a text by hand. The correct solution will be made available during the challenge in order to enable self-assessment. This data-set will not affect the final results. “If vaccilation dwell with the heart, the soul will see it. Shame and honour clash with the courage of a steadfast man is motley like a magpie. But such a man may yet make merry, for Heaven & Hell have equal part in him. ...” The text is the beginning of the German poem Parzival by Wolfram von Eschenbach, translated into English by A.T. Hatto. The data was kindly provided to us by Matthew Spencer and Heather F. Windram.

Data-Set #3: Notre Besoin

Another artificial data-set. The text is from Stig Dagerman’s Notre besoin de consolation est impossible à rassasier (Paris: Actes Sud, 1952; translated from Swedish into French by P. Bouquet), kindly provided to us by Caroline Macé. “Je suis dépourvu de foi et ne puis donc être heureux, car un homme qui risque de craindre que sa vie ne soit errance absurde vers une mort certaine ne peut être heureux. ...”

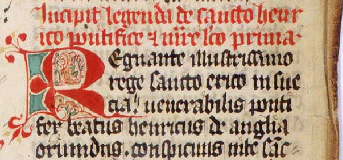

Data-Set #4: Legend of St. Henry

This is a real data-set. The text is the Legend of St. Henry of Finland in Latin, written by the end of the 13th century at the latest. The surviving 52 versions are provided, some of which are severely damaged and fragmentary.

Procedures

There are several data-sets included in the challenge. Some of them are real transcribed medieval manuscripts, others are artificial (but hand-copied). The data-sets are made available in (at least) two phases. Correct answers to some of the first-phase data-sets will be announced before the final submission deadline in order to enable self-assessment by the participants. These data-sets will not affect the final results. Submissions are made by groups of one or more persons. Each group should submit at most two solutions. If more than two solutions are submitted, the last two before the deadline are accepted. A person can belong to at most two groups. (So a person can contribute to a maximum of four solutions.)

Evaluation

For the artificial data-sets, for which there is a known ‘ground-truth’ solution, the difference between the proposed and the correct solution is evaluated. The exact criterion is open for discussion among participants (and other interested parties). The current scoring function is based on distance orders among triplets (for details, see Example). For the real data-sets, there is no known correct solution. Therefore, EBS¹ will be used: the ‘owner’ of the data-set will be asked to judge whether the proposed solution is plausible and useful from his or her point of view.

¹ EBS: endorphin-based scoring

Ranking Schemes

There are two ranking schemes.
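To illustrate the triplet-based criterion mentioned under Evaluation, here is a hedged sketch under one plausible reading of "distance orders among triplets"; the official definition is the one given in the Example and in the evaluation script. Assumptions: a stemma is given as a list of undirected edges (possibly including nodes for missing copies), distance is the number of edges on the shortest path, every listed variant occurs in both stemmata, and the score is the fraction of triplet comparisons (is x closer to y or to z?) on which the two stemmata agree. The names `path_distances` and `triplet_score` are hypothetical.

```python
from collections import deque
from itertools import combinations


def path_distances(edges):
    """All-pairs shortest-path lengths, counted in edges, ignoring direction."""
    graph = {}
    for u, v in edges:
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set()).add(u)
    dist = {}
    for source in graph:
        # Breadth-first search from each node gives unweighted path lengths.
        seen = {source: 0}
        queue = deque([source])
        while queue:
            node = queue.popleft()
            for nxt in graph[node]:
                if nxt not in seen:
                    seen[nxt] = seen[node] + 1
                    queue.append(nxt)
        for target, d in seen.items():
            dist[source, target] = d
    return dist


def _sign(x):
    return (x > 0) - (x < 0)


def triplet_score(true_edges, proposed_edges, leaves):
    """Fraction of triplet distance comparisons on which two stemmata agree.

    For every triplet and each member x of it, compare whether x is closer
    to the first or to the second of the other two members (or equally close).
    """
    d_true = path_distances(true_edges)
    d_prop = path_distances(proposed_edges)
    agree = total = 0
    for triple in combinations(sorted(leaves), 3):
        for x in triple:
            y, z = (t for t in triple if t != x)
            true_order = _sign(d_true[x, y] - d_true[x, z])
            prop_order = _sign(d_prop[x, y] - d_prop[x, z])
            agree += true_order == prop_order
            total += 1
    return agree / total if total else 1.0
```

A proposal identical to the correct stemma scores 1.0; swapping two variants between branches lowers the score. Because distances ignore edge direction, the same sketch also accepts stemmata in which a copy has more than one parent.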

The organizers reserve the right to alter the rules of the challenge at any point.

Restrictions

Anyone who has been in contact with any of the data-sets, or has otherwise obtained inside information about the correct solution, should obviously not enter the challenge for the data-set(s) in question. However, participation is allowed for the other data-sets. The data-sets are provided only for use in the challenge. Further use of the data requires explicit permission from the organizers and the original providers of the data.

Results

*) The entries below the horizontal line were added by the organizers for comparison purposes and are therefore not included in the competition. **) RHM06 = (Roos, Heikkilä, Myllymäki, “A Compression-Based Method for Stemmatic Analysis”, ECAI-06). ***) Data #4 is ‘real’ and no correct solution is known. The winner in the primary ranking (Data #1) is Team Demokritos (click on the team title in the table for more information). The secondary ranking is based on Data #3 and Data #4, for which the winner is Rudi Cilibrasi, whose submission was the best for Data #3. Neither submission was “plausible and useful” for Data #4 (as judged by a domain expert), so no points were awarded to anyone.


Some of the copied variants are ‘missing’, i.e., not included in the given data-set. The copies were made by hand (pencil and paper). Note that the structure of the possible stemma is not necessarily limited to a bifurcating tree: more than two immediate descendants (children) and more than one immediate predecessor (parents) are possible.
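Because a stemma may have copies with more than two children or more than one parent, a plain binary tree is not a sufficient representation. A minimal sketch, assuming a stemma is stored as a mapping from each copy to the set of copies it was made from (a directed acyclic graph), uses the standard-library `graphlib` module (Python 3.9+). The stemma below, including the node "lost" standing for a missing copy, is entirely hypothetical, as are the helper names.

```python
from graphlib import CycleError, TopologicalSorter


def topological_order(parents):
    """Return the copies in an order where every parent precedes its children."""
    return list(TopologicalSorter(parents).static_order())


def is_acyclic(parents):
    """A valid stemma must not let a copy descend from itself."""
    try:
        topological_order(parents)
    except CycleError:
        return False
    return True


def children_of(parents):
    """Invert the parent map: copy -> set of its immediate descendants."""
    children = {node: set() for node in parents}
    for node, preds in parents.items():
        for parent in preds:
            children.setdefault(parent, set()).add(node)
    return children


# Hypothetical stemma: each copy maps to the copies it was made from.
# "lost" stands for an intermediate copy that is missing from the data-set.
stemma = {
    "original": set(),
    "lost": {"original"},
    "A": {"original"},
    "B": {"lost"},
    "C": {"lost"},
    "D": {"lost"},
    "E": {"A", "B"},  # more than one immediate predecessor
}

multi_parent = sorted(n for n, p in stemma.items() if len(p) > 1)
wide = sorted(n for n, c in children_of(stemma).items() if len(c) > 2)
print(topological_order(stemma)[0], multi_parent, wide)  # original ['E'] ['lost']
```

Storing parents rather than children makes copies with several exemplars a natural case, and the topological sort doubles as a check that the proposed stemma contains no cycles.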

For ease of use, the texts are aligned so that each line in the file contains one word, and empty lines are added so that the lines match across different files (e.g. the 50th line in each file contains the word pontificem unless that part of the manuscript is missing or damaged). The text is roughly 900 words long.
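Since the files are aligned line by line, the n-th line of every file refers to the same place in the text. A hedged sketch for loading them, assuming one UTF-8 file per variant with a `.txt` extension in a single directory (the layout, extension, and encoding are assumptions, as are the helper names `load_aligned` and `column`), and assuming that a file ending early should be padded with empty, i.e. missing, lines:

```python
from pathlib import Path


def load_aligned(directory, pattern="*.txt"):
    """Load one-word-per-line aligned texts; '' marks a missing or damaged word."""
    texts = {}
    for path in sorted(Path(directory).glob(pattern)):
        lines = path.read_text(encoding="utf-8").splitlines()
        texts[path.stem] = [line.strip() for line in lines]
    n_loci = max((len(words) for words in texts.values()), default=0)
    # Assumption: a file that ends early is padded with empty (missing) loci.
    for words in texts.values():
        words.extend([""] * (n_loci - len(words)))
    return texts


def column(texts, line_number):
    """Words found at a given 1-based line number across all variants."""
    return {name: words[line_number - 1] for name, words in texts.items()}
```

For example, `column(load_aligned("data"), 50)` would collect the word at line 50 of every file in a hypothetical `data` directory, with an empty string wherever that part of a manuscript is missing or damaged.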
